- September 24, 2022

- Posted by: Author Anoma

“Predicting the future isn’t magic, it’s artificial intelligence.”

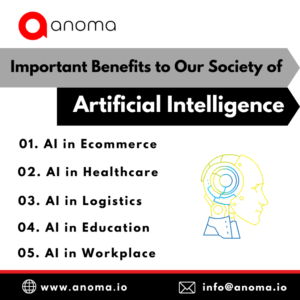

AI is profoundly transforming human lives and businesses. Automated systems now make critical decisions in many aspects of life. In hiring, for example, some organizations rely solely on AI systems and chatbot recommendations. Similarly, in the legal arena, judges are increasingly turning to AI algorithms to help make decisions. Many of these AI decisions are difficult for humans to understand, and their results are not always fair.

As a result, it is critical to ensure that AI systems are developed and deployed ethically, safely, and responsibly. Failing to build accountability into AI systems can cause significant harm to businesses, individuals, and society as a whole. Let’s look at how to incorporate accountability into AI services.

An Overview of Responsible AI

Artificial intelligence brings businesses not only enormous opportunities but also enormous responsibilities. The output of an AI system can directly affect people’s lives, raising serious ethical, data governance, trust, and legal concerns. The more decisions a company entrusts to AI, the greater the risks it faces, including reputational, employment, data privacy, and safety risks. This is where Responsible AI comes in.

“Emotions are an essential part of human intelligence. Without emotional intelligence, artificial intelligence is incomplete.” (Amit Ray)

Responsible AI is a method of designing, developing, and deploying AI with the goal of empowering employees and organizations while also having a fair impact on consumers and society. It allows businesses to build trust and scale AI with confidence.

Many businesses are drafting high-level guidelines for developing and deploying AI technologies. Principles, however, are only useful if they are followed.

The following is a breakdown of the entire AI lifecycle:

1. Design — Designing the system entails defining its goals and objectives, as well as any fundamental assumptions and basic performance criteria.

2. Development — Establishing technical requirements, gathering and processing data, developing the model, and testing the system are all part of the development process.

3. Deployment — Testing, ensuring regulatory compliance, assessing compatibility with other systems, and analyzing user experience are all part of the deployment process.

4. Monitoring — This entails reviewing the system’s outputs and impacts on a regular basis, revising the model, and determining whether to extend or deactivate the system.

The Four Dimensions of Accountability in Artificial Intelligence

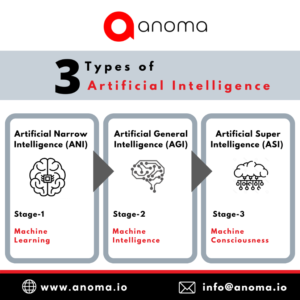

Accountability establishes responsibility throughout the AI lifecycle, from design to deployment and monitoring. An accountability framework also assesses AI systems along four dimensions: governance, data, performance, and monitoring.

1. Examine the governance structure:

Governance processes are critical for AI accountability. Appropriate AI governance can help an organization manage risk, demonstrate ethical values, and ensure compliance. Accountability for AI entails looking for clear goals and objectives for the AI system, well-defined roles and lines of authority, a diverse workforce capable of managing AI systems, engagement with various stakeholder groups, and organizational risk-management methods.

“The purpose of artificial intelligence is to re-engineer the human mind.” (Chris Duffey)

2. Examine the data:

Data is at the heart of AI and machine-learning systems in the digital age. The same data that gives AI systems strength can also be a source of weakness. It is critical to document how data is used at two stages of the AI system: when developing the underlying model and when the system is in use. Reporting the sources and origins of the data used to build AI models is an essential component of good AI accountability.
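One way to make this documentation concrete is a small provenance log. The sketch below is illustrative only: the `Stage` and `DataRecord` names, their fields, and the two helper functions are assumptions made for this example, not part of any specific framework.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    """The two stages at which data use should be documented."""
    DEVELOPMENT = "development"  # data used to build the underlying model
    OPERATION = "operation"      # data the system consumes once it is in use


@dataclass(frozen=True)
class DataRecord:
    """Hypothetical log entry describing one dataset."""
    name: str
    stage: Stage
    source: str  # where the data came from; empty means undocumented


def missing_provenance(records):
    """Return the names of datasets whose source has not been recorded."""
    return [r.name for r in records if not r.source.strip()]


def stages_covered(records):
    """Return the set of stages that have at least one documented entry."""
    return {r.stage for r in records if r.source.strip()}
```

A reviewer could run `missing_provenance` over the log before sign-off and check that `stages_covered` includes both `Stage.DEVELOPMENT` and `Stage.OPERATION`.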

3. Set performance objectives and metrics:

After developing and deploying an AI system, it is critical not to overlook the significance of questions such as “why did you develop this system?” and “how is it working?” Businesses require detailed documentation of an AI system’s declared objective, as well as definitions of performance indicators and methodologies for evaluating performance, to address these critical concerns.

Management and those in charge of reviewing these systems must be able to ensure that an AI application meets its goals. These performance evaluations must focus not only on the overall system but also on the various components that support and interact with it.
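The idea of declared objectives and performance indicators can be sketched as a list of metric targets checked against observed values. This is a minimal illustration under stated assumptions: `MetricTarget`, `evaluate`, and the metric names in the usage note are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricTarget:
    """Hypothetical performance indicator with an agreed target value."""
    name: str
    target: float
    higher_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        # Accuracy-like metrics should reach the target;
        # cost-like metrics (latency, error rate) should stay at or below it.
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


def evaluate(targets, observed):
    """Map each metric name to True if its target is met.

    A metric with no observed value counts as not met, so gaps in
    measurement show up in the review instead of passing silently.
    """
    results = {}
    for t in targets:
        value = observed.get(t.name)
        results[t.name] = value is not None and t.is_met(value)
    return results
```

For example, with targets `MetricTarget("accuracy", 0.90)` and `MetricTarget("latency_ms", 200, higher_is_better=False)`, calling `evaluate` on the observed values for the overall system, and then again for each supporting component, gives reviewers one pass/fail table per level.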

4. Examine monitoring strategies:

Artificial intelligence should not be viewed as a panacea. Many of AI’s advantages stem from its ability to automate specific jobs at scales and speeds far beyond human capability, but that same automation means people must keep watching the results. Monitoring includes determining an acceptable range of model drift and checking the system on an ongoing basis to ensure that it produces the desired results.

Long-term monitoring should also include determining whether the operating environment has changed and whether the system can be scaled up or expanded to new operational settings.
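An acceptable range of model drift can be written down as a simple policy and checked automatically. The sketch below assumes accuracy is the monitored metric and that drift is measured as the drop from a baseline agreed at deployment. `DriftPolicy`, `check_drift`, and the numbers in the usage note are hypothetical, and real systems may track other signals as well.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class DriftPolicy:
    """Hypothetical policy: baseline accuracy and the tolerated drop from it."""
    baseline_accuracy: float
    max_drop: float  # acceptable drift, in absolute accuracy points


def check_drift(policy, recent_accuracies):
    """Compare the mean of recent accuracy readings with the baseline."""
    if not recent_accuracies:
        raise ValueError("need at least one recent accuracy reading")
    current = mean(recent_accuracies)
    drop = policy.baseline_accuracy - current
    return {
        "current_accuracy": current,
        "drop": drop,
        "within_range": drop <= policy.max_drop,
    }
```

With `DriftPolicy(baseline_accuracy=0.90, max_drop=0.05)`, readings averaging 0.88 stay within range, while readings averaging 0.81 fall outside it and would prompt a review of whether to revise, retrain, or deactivate the system.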

The overall framework specifies procedures for each of the four dimensions mentioned above (governance, data, performance, and monitoring). Executives, risk managers, audit experts, and indeed anyone working to ensure accountability for an organization’s AI systems can use this approach right away.

“You may not realize it, but artificial intelligence is all around us.”

AI Accountability – Anoma Tech

At Anoma Tech, we employ top-tier talent in the USA and across the globe, primarily in Egypt, Canada, LATAM, the UAE, and India.

We have not only supported existing code bases but also built core frameworks from scratch. Anoma Tech Inc. provides services across a wide variety of technologies, including mobile product development, web development, quality assurance, and DevOps, for companies of all sizes.